
November 28, 2021

Bob de Vos made the code for Deep Learning Image Registration (DLIR) available on GitHub.

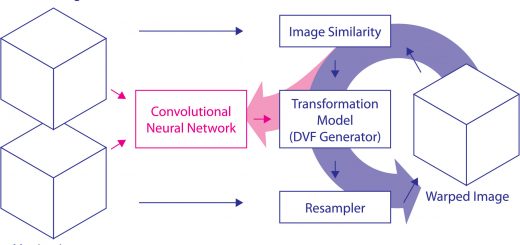

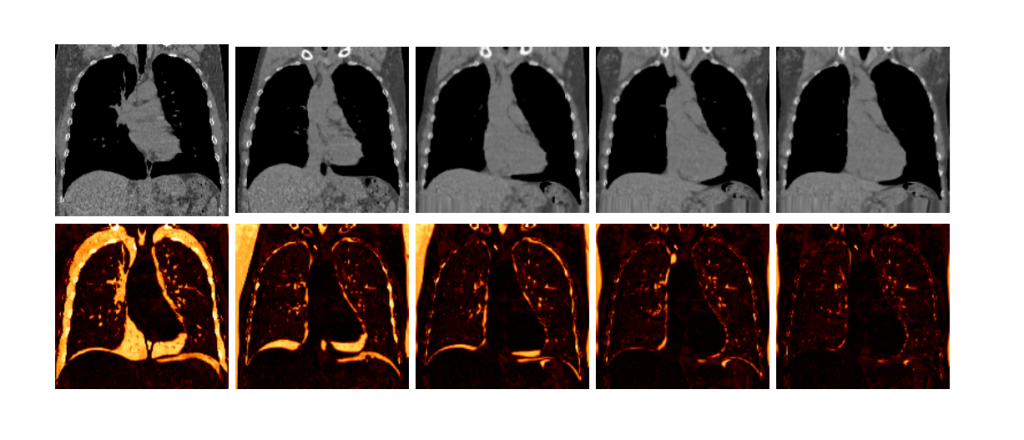

The DLIR framework enables unsupervised affine and deformable image registration. ConvNets are trained for image registration by optimizing an image similarity measure between the fixed image and the warped moving image, analogous to conventional intensity-based image registration. Once a ConvNet has been trained with the DLIR framework, it can register pairs of unseen images in one shot. We propose flexible ConvNet designs for affine image registration and for deformable image registration. By stacking several of these ConvNets into a larger architecture, we can perform coarse-to-fine image registration.
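The training principle described above can be illustrated with a small NumPy sketch. It is not the TorchIR API and contains no ConvNet: the displacement fields a trained DLIR network would predict are supplied by hand, and all function names (`warp`, `affine_to_disp`, `ncc`, `registration_loss`, `compose`) are hypothetical. Normalized cross-correlation is assumed as the similarity measure. The sketch shows that the loss is computed from image intensities alone, so no ground-truth transformation is needed, and that a later stage can refine the result of an earlier one.

```python
import numpy as np


def bilinear_sample(image, rows, cols):
    """Sample `image` at real-valued (row, col) positions with bilinear
    interpolation; positions outside the image read as 0 (zero padding)."""
    h, w = image.shape
    r0 = np.floor(rows).astype(int)
    c0 = np.floor(cols).astype(int)
    dr, dc = rows - r0, cols - c0
    out = np.zeros(rows.shape, dtype=float)
    # Sum the four neighbouring pixels, each weighted by its bilinear weight.
    for off_r, wr in ((0, 1.0 - dr), (1, dr)):
        for off_c, wc in ((0, 1.0 - dc), (1, dc)):
            rr, cc = r0 + off_r, c0 + off_c
            inside = (rr >= 0) & (rr < h) & (cc >= 0) & (cc < w)
            vals = np.zeros(rows.shape, dtype=float)
            vals[inside] = image[rr[inside], cc[inside]]
            out += wr * wc * vals
    return out


def warp(moving, disp):
    """Backward warp: out(x) = moving(x + disp(x)); disp has shape (2, H, W)."""
    h, w = moving.shape
    rows, cols = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    return bilinear_sample(moving, rows + disp[0], cols + disp[1])


def affine_to_disp(A, t, shape):
    """Express the affine map x -> A @ x + t (pixel coords) as a displacement field."""
    rows, cols = np.meshgrid(np.arange(shape[0]), np.arange(shape[1]), indexing="ij")
    grid = np.stack([rows, cols]).astype(float)  # (2, H, W)
    mapped = np.einsum("ij,jhw->ihw", A, grid) + np.reshape(t, (2, 1, 1))
    return mapped - grid


def ncc(a, b, eps=1e-8):
    """Global normalized cross-correlation, in [-1, 1]."""
    a, b = a - a.mean(), b - b.mean()
    return float((a * b).sum() / (np.sqrt((a * a).sum() * (b * b).sum()) + eps))


def registration_loss(fixed, moving, disp):
    """Unsupervised loss: uses image intensities only, no ground-truth transform."""
    return -ncc(fixed, warp(moving, disp))


def compose(u_first, u_second):
    """Single displacement equivalent to warping with u_first, then u_second:
    u(x) = u_second(x) + u_first(x + u_second(x)); u_first reads as 0 outside the image."""
    return u_second + np.stack([warp(u_first[0], u_second), warp(u_first[1], u_second)])


# Toy pair: a bright square, and the same square shifted 2 pixels to the right.
fixed = np.zeros((16, 16))
fixed[5:10, 4:9] = 1.0
moving = np.roll(fixed, 2, axis=1)

identity = np.zeros((2, 16, 16))
shift = affine_to_disp(np.eye(2), [0.0, 2.0], fixed.shape)
print(registration_loss(fixed, moving, identity))  # ≈ -0.557 (misaligned)
print(registration_loss(fixed, moving, shift))     # ≈ -1.0 (aligned)

# Stacking stand-in: two stages, each correcting part of the shift.
# In practice, later stages would predict finer (e.g. deformable) corrections.
stage1 = affine_to_disp(np.eye(2), [0.0, 1.0], fixed.shape)
stage2 = affine_to_disp(np.eye(2), [0.0, 1.0], fixed.shape)
print(np.allclose(warp(moving, compose(stage1, stage2)), fixed))  # True
```

Composing the stage displacements and resampling the image once, as `compose` does, avoids interpolating the image twice. This is one common way to chain stages, assumed here for illustration; TorchIR may combine its stacked transformations differently.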

The approach is described in the paper “A deep learning framework for unsupervised affine and deformable image registration” by Bob D. de Vos, Floris F. Berendsen, Max A. Viergever, Hessam Sokooti, Marius Staring and Ivana Išgum, published in Medical Image Analysis [1].

The code is available at https://github.com/BDdeVos/TorchIR.

It contains several techniques presented in our studies [1, 2], as well as a tutorial that showcases its features using computationally lightweight image data (handwritten digits). The code is still under development, but it can already be applied in your research projects.

[1] Bob D. de Vos, Floris F. Berendsen, Max A. Viergever, Hessam Sokooti, Marius Staring and Ivana Išgum, “A deep learning framework for unsupervised affine and deformable image registration,” Medical Image Analysis, vol. 52, pp. 128-143, Feb. 2019, doi: 10.1016/j.media.2018.11.010.

[2] Bob D. de Vos, Floris F. Berendsen, Max A. Viergever, Marius Staring and Ivana Išgum, “End-to-end unsupervised deformable image registration with a convolutional neural network,” in Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support, Springer, Cham, 2017, pp. 204-212, doi: 10.1007/978-3-319-67558-9_24.