Eye Lab at ARVO 2020

May 7, 2020

Eye Lab’s research on AI explainability highlighted by Radboudumc

June 24, 2020

Our paper ‘Iterative Augmentation of Visual Evidence for Weakly-Supervised Lesion Localization in Deep Interpretability Frameworks: Application to Color Fundus Images’, by González-Gonzalo et al., has been published in IEEE Transactions on Medical Imaging. In this paper, we present a novel deep visualization method that makes the predictions of deep learning classifiers in medical imaging interpretable.

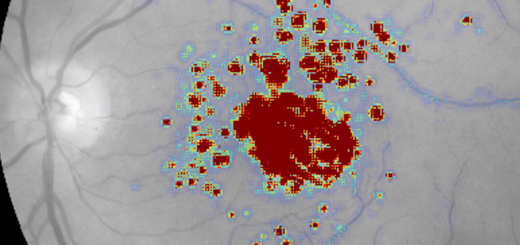

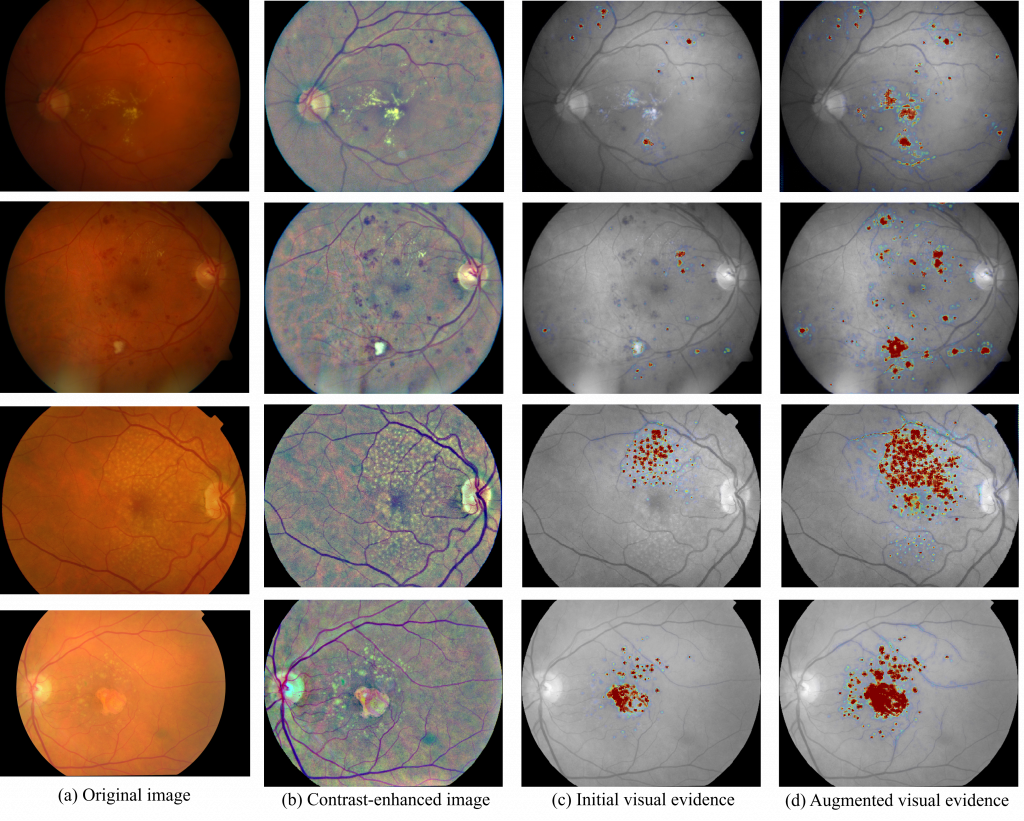

Deep learning (DL) systems in medical imaging have been shown to provide high-performing approaches for diverse tasks in healthcare. Nevertheless, DL systems are often referred to as “black boxes” because their predictions lack interpretability. This is especially problematic in healthcare applications, where it hinders experts’ trust and the integration of these systems into clinical settings. In this paper, Cristina González-Gonzalo highlights the importance of explainable artificial intelligence in healthcare. The proposed method iteratively unveils abnormalities in a weakly-supervised manner and yields augmented visual evidence of the system’s predictions, including less discriminative areas that should also be considered for the final diagnosis. We show that augmented visual evidence highlights the biomarkers considered by clinical experts for diagnosis, improves the final performance for weakly-supervised lesion localization, and can be integrated into different interpretability frameworks. We focus on the automated grading in color fundus images of diabetic retinopathy and age-related macular degeneration, leading causes of blindness worldwide.

Examples of visual evidence for automated grading of diabetic retinopathy (DR) and age-related macular degeneration (AMD) in color fundus (CF) images. The figure shows the initial visual evidence and the augmented visual evidence, generated by an iterative process that combines visual attribution and selective inpainting. The augmented visual evidence maps highlight less discriminative areas that might also be relevant for the final diagnosis, including abnormalities of different types, shapes and sizes, and improve the system’s performance for weakly-supervised lesion localization. This is shown for images correctly classified as moderate non-proliferative DR (first row), severe non-proliferative DR (second row), intermediate AMD (third row), and advanced AMD (fourth row).
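
To make the idea of combining visual attribution with selective inpainting more concrete, here is a minimal toy sketch in Python. It is not the implementation from the paper: the classifier (`toy_score`), the attribution method (`toy_attribution`), the inpainting step (`toy_inpaint`), the stopping threshold and the way maps are accumulated are all hypothetical stand-ins, chosen only so that the loop runs on a small NumPy array.

```python
import numpy as np

def toy_score(image):
    # Hypothetical classifier: the "disease" score is the brightest pixel.
    return float(image.max())

def toy_attribution(image):
    # Hypothetical visual attribution: keeps only the brightest pixels,
    # i.e. the most discriminative region for toy_score.
    return np.where(image == image.max(), image, 0.0)

def toy_inpaint(image, mask):
    # Hypothetical selective inpainting: fill masked pixels with the
    # median of the unmasked pixels (0.0 if everything is masked).
    filled = image.copy()
    fill_value = np.median(image[~mask]) if (~mask).any() else 0.0
    filled[mask] = fill_value
    return filled

def augment_visual_evidence(image, threshold=0.5, max_iters=10):
    """Return (initial, augmented) evidence maps for a 2D image.

    Assumed loop: attribute, inpaint the highlighted region, re-score,
    and stop once the score drops below `threshold`.
    """
    current = np.asarray(image, dtype=float).copy()
    augmented = np.zeros_like(current)
    initial = None
    for _ in range(max_iters):
        if toy_score(current) < threshold:
            break  # the image no longer looks abnormal to the classifier
        evidence = toy_attribution(current)
        if initial is None:
            initial = evidence.copy()  # evidence from the first pass only
        # Accumulate evidence across iterations (assumed: elementwise max).
        augmented = np.maximum(augmented, evidence)
        # Hide what was just found so weaker regions can surface next.
        current = toy_inpaint(current, evidence > 0)
    if initial is None:
        initial = np.zeros_like(augmented)
    return initial, augmented
```

On an image with one strong and several weaker bright spots, the initial map in this toy setting contains only the strongest spot, while the augmented map also picks up the weaker ones that are revealed after each inpainting step.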