Opening of AI for Oncology Lab on 24th June 2021

June 10, 2021

Ivana Isgum presents at the Thoracic Cath conference at Erasmus MC

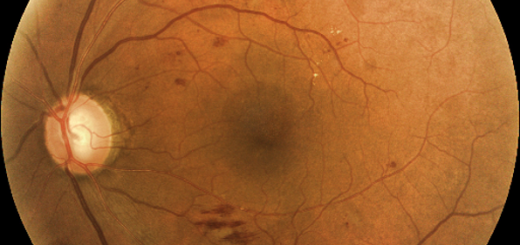

June 22, 2021

Our paper ‘Adversarial Attack Vulnerability of Medical Image Analysis Systems: Unexplored Factors’, by Bortsova, González-Gonzalo, Wetstein et al. is now available online in Medical Image Analysis. In this paper, Cristina González-Gonzalo (Eye Lab, qurAI Group, UvA / Diagnostic Image Analysis Group, Radboudumc) worked in collaboration with Gerda Bortsova (Biomedical Imaging Group Rotterdam, Erasmus MC) and Suzanne Wetstein (Medical Image Analysis Group, TU Eindhoven) to study previously unexplored factors affecting adversarial attack vulnerability of deep learning medical image analysis (MedIA) systems in three domains: ophthalmology, radiology, and pathology.

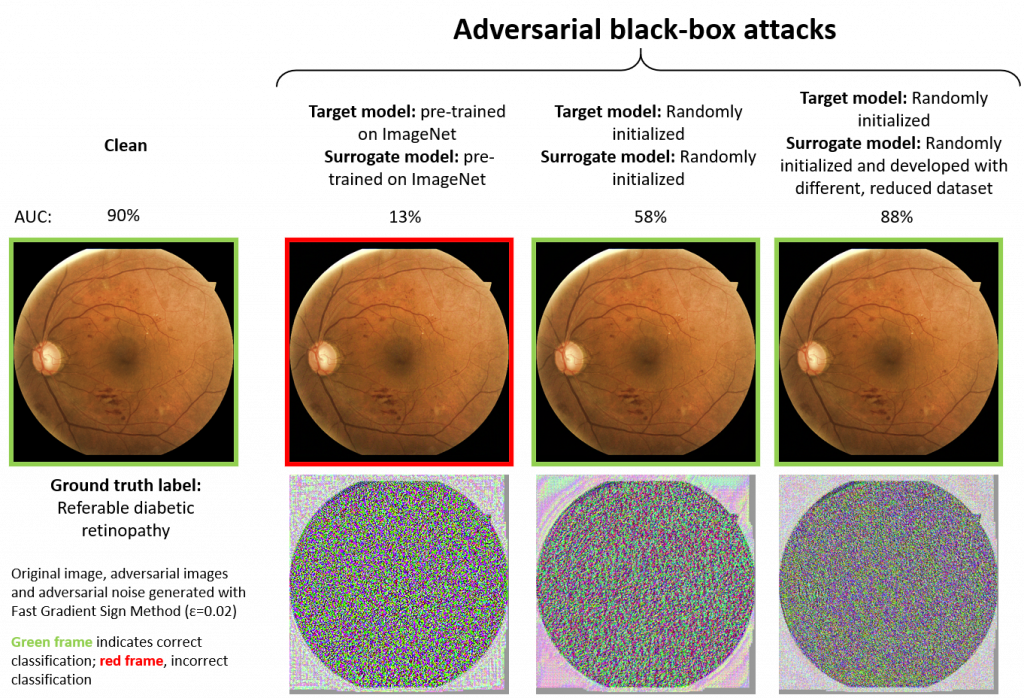

We focus on adversarial black-box settings, in which the attacker does not have full access to the target model and usually uses another model, commonly referred to as a surrogate model, to craft adversarial examples that are then transferred to the target model. We consider this to be the most realistic scenario for MedIA systems.
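To make the black-box transfer setting concrete, here is a minimal toy sketch in Python with NumPy. It is not the setup used in the paper: the two logistic-regression "models", their weights, the synthetic data, and the helper names `fgsm_on_surrogate` and `accuracy` are all assumptions made for illustration. The fast gradient sign method (FGSM) is used only as one common way to craft adversarial examples. The attacker computes gradients on the surrogate alone and then applies the resulting examples to the target.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fgsm_on_surrogate(x, y, w_sur, b_sur, eps):
    """Craft adversarial examples using only the surrogate's gradients."""
    p = sigmoid(x @ w_sur + b_sur)  # surrogate's predicted P(y = 1)
    # Gradient of the cross-entropy loss w.r.t. the input for logistic regression.
    grad_x = (p - y)[:, None] * w_sur[None, :]
    # One signed step of size eps in the direction that increases the loss.
    return x + eps * np.sign(grad_x)


def accuracy(x, y, w, b):
    """Fraction of samples a logistic-regression model classifies correctly."""
    return float(np.mean((sigmoid(x @ w + b) > 0.5) == (y == 1)))


# Toy 2-D data standing in for images (hypothetical, for illustration only).
rng = np.random.default_rng(0)
x = rng.normal(size=(200, 2))
y = (x[:, 0] + x[:, 1] > 0).astype(float)

# Hypothetical fixed weights: the attacker owns the surrogate,
# while the target's parameters and gradients are never used in the attack.
w_sur, b_sur = np.array([1.0, 0.8]), 0.0
w_tgt, b_tgt = np.array([0.9, 1.1]), 0.0

x_adv = fgsm_on_surrogate(x, y, w_sur, b_sur, eps=0.5)

clean_acc = accuracy(x, y, w_tgt, b_tgt)
adv_acc = accuracy(x_adv, y, w_tgt, b_tgt)
print(f"target accuracy on clean inputs:       {clean_acc:.2f}")
print(f"target accuracy on transferred inputs: {adv_acc:.2f}")
```

In this toy case the surrogate and target have similar decision boundaries, so the perturbation transfers and the target's accuracy drops. With a real deep network the same pattern applies, but the gradient would come from automatic differentiation rather than a closed-form expression.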

Our experiments show that pre-training on ImageNet may dramatically increase the transferability of adversarial examples, even when the target and surrogate architectures differ: the larger the performance gain from pre-training, the larger the transferability. Differences in the development data between target and surrogate models considerably decrease the performance of the attack; this decrease is further amplified by differences in model architecture. We believe these factors should be considered when developing security-critical MedIA systems intended for deployment in clinical practice.